If you have already heard of the R language and think that SEOs who use it are aliens, you are not entirely wrong.

Initially intended for data scientists and statisticians, the R language has, for some years now, been reaching unexpected audiences, and the reason is simple:

Automating tasks, retrieving and aggregating data from APIs, scraping web pages, cross-referencing several files (keyword lists, for example), text mining, machine learning, NLP and semantic analysis: R and its many packages offer plenty of possibilities for SEO.

However, let’s be clear:

R is not an SEO secret!

What I do know, after several months of use, is that R is revolutionizing the approach to SEO by bringing new ideas and working methods.

If you have ever dreamed of creating your own SEO tools, or of moving from traditional empirical strategies to data-driven SEO, you are on your way to becoming an extrateRrestrial too.

Start with R

I started using R from scratch at the beginning of 2017, and decided to go deeper with a general online course on the well-known training site Coursera.org.

The Johns Hopkins University Data Science course includes several modules and costs 45€ / month.

I mainly recommend the following courses:

– The Data Scientist’s Toolbox

– R Programming

– Getting and Cleaning Data

After a few weeks of learning at an average of one hour a day, I am now able to save a considerable amount of time in my SEO projects by creating my first scripts.

Many manual, repetitive and often time-consuming SEO tasks are gradually being replaced by R scripts.

Are you interested in this new way of doing SEO? Here you will find everything you need to get started: resources, useful command lines (to be used as an “R cheat sheet”), some R scripts that you can use for your projects and R tutorials for SEO.

Data Science training for SEOs

If you want to accelerate your learning of Data Science for your SEO projects, I invite you to register for the next Data Science SEO Training. Please note that technical knowledge is not required to participate in the training.

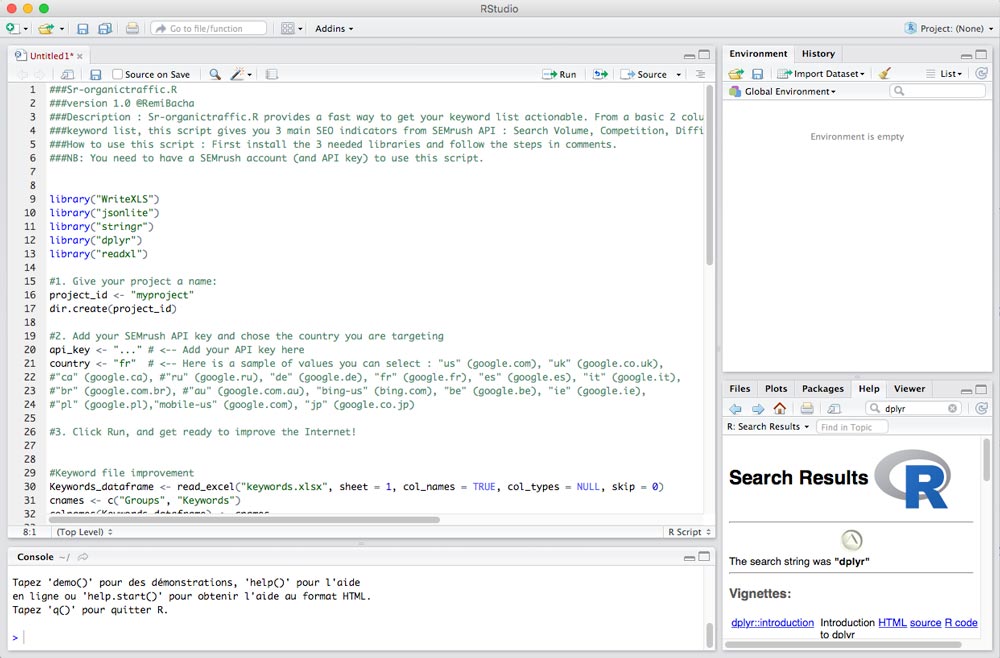

Where to write R?

Start with:

- Download R

- Install RStudio, a free and open-source IDE for R.

Once RStudio is installed, you can test the following R scripts directly in the console (lower left pane) or copy and paste them into a new script: File > New File > R Script

Basic functions

sessionInfo() #View environment information

??read #Search the help for "read"

getwd() #View the working directory

setwd("/Users/remi/dossier/") #Set the working directory

list.files() #See the contents of the directory

dir.create("nomdudossier") #Create a folder in the directory

R Packages

R has a lot of packages (downloadable “functionalities”). You will find the list on the CRAN website.

install.packages("nomdupackage") #Install a package

install.packages(c("packageA", "packageB", "packageC"))#Install several packages at a time

#Install a package list only if they are not already installed

list.of.packages <- c("dplyr", "ggplot2","tidyverse", "tidytext", "wordcloud", "wordcloud2", "gridExtra", "grid")

new.packages <- list.of.packages[!(list.of.packages %in% installed.packages()[,"Package"])]

if(length(new.packages)) install.packages(new.packages)

library("nomdupackage") #Load an installed package

?nomdupackage #View package documentation

packageVersion("nomdupackage") #Get the version of a package

detach("package:dplyr", unload=TRUE) #Force a package to unload without closing RStudio

Here are some of the R packages that you will use most for SEO:

- dplyr : Handling data from a dataframe (filter, sort, select, summarize, etc)

- SEMrushR (French) : Use the SEMrush API (my 1st official package, on CRAN!)

- majesticR (French) : Use the Majestic API (my 2nd official package)

- kwClustersR : Cluster a list of keywords

- duplicateContentR (French) : Calculate a similarity score between 2 pages to detect duplicate content

- text2vec : Extract n-grams

- eVenn : Create Venn diagrams (useful for semantic audits)

- tm : Process accents and stopwords

- ggplot2 : Make graphs

- shiny : Create a real application based on your scripts

- searchConsoleR (French) : Use the Google Search Console API

- httr : Make GET, POST, PUT, DELETE requests

- RCurl : Also to make requests, more complete than httr

- XML : To parse web pages

- jsonlite : Retrieve json

- googleAuthR : To manage authentication to Google APIs

- googleAnalyticsR : Working with the Google Analytics API

- searchConsoleR : Download Search Console data into R

- urltools : Perform processing in URLs

Process large volumes of data

Data is used systematically in any SEO project, whether it comes from Screaming Frog, SEMrush, Search Console, your web analytics tool or elsewhere. It can come directly from APIs or from manual exports.

In the following sections you will find tips on how to process these datasets.

Open and save a dataset

mondataframe <- data.frame() #Create a dataframe (allows you to mix numeric and text data)

combined <- data.frame(df1, df2) #Combine 2 dataframes side by side (they must have the same number of rows)

#Open a TXT file

Fichier_txt <- read.table("filename.txt", header = FALSE, col.names = c("nomcolonne1", "nomcolonne2", "nomcolonne3"))

#Open an XLS

library(readxl)

Fichier_xls <- read_excel("cohorte.xls", sheet = 1, col_names = FALSE, col_types = NULL, skip = 1)

#Open a CSV

Fichier_csv <- read.csv2("df.csv", header = TRUE, sep = ";", stringsAsFactors = FALSE)

#Save your dataset

write.csv(mydataset, "mydataset.csv") #Create a csv

write.table(mydataset, "mydataset.txt") #Create a txt

#Change column names

cnames <- c("keywords", "searchvolume", "competition", "CPC") #we define names for the 4 columns of the dataframe

colnames(mydataset) <- cnames #the column names are assigned to the dataframe
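To check that a file survives a save and reload, here is a minimal sketch using only base R. The keyword values and the temporary file are invented for illustration; `write.csv2()` is used because it writes the semicolon-separated format that `read.csv2()` expects.

```r
# Toy keyword dataset (values are invented for illustration)
keywords <- data.frame(
  keyword = c("seo audit", "r seo", "keyword clustering"),
  searchvolume = c(1900, 320, 90),
  stringsAsFactors = FALSE
)

# Write to a temporary CSV with ";" as separator
csv_path <- tempfile(fileext = ".csv")
write.csv2(keywords, csv_path, row.names = FALSE)

# Read the file back and compare it with the original
reloaded <- read.csv2(csv_path, header = TRUE, stringsAsFactors = FALSE)
same_shape <- identical(dim(keywords), dim(reloaded))

# Clean up the temporary file
unlink(csv_path)
```

Passing `row.names = FALSE` avoids an extra unnamed column of row numbers, which is a common surprise when the file is reopened later.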

Know your dataset better

object.size(dataset) #Get the object size in bytes

head(dataset) #See the first rows

tail(dataset) #See the last rows

colnames(dataset) #Know the names of the columns

apply(dataset, 2, function(x) length(unique(x))) #Know how many different values there are in each column of the dataset

summary(dataset) #Have a summary of each column of the dataset (minimum, median, average, maximum, etc.)

summary(dataset$colonne) #Same thing for a particular column

dim(dataset) #Dataset dimensions (number of rows, then number of columns)

str(dataset) #More complete than dim() : Dataset dimensions + Data type in each column

which(dataset$Colonne == "seo") #Get the positions of the rows whose "Colonne" column equals "seo"
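Here is a small sketch of a few of these inspection commands on a toy dataframe, so you can see what each one returns. The column names and values are made up for the example.

```r
# Toy dataset (invented values)
dataset <- data.frame(
  keyword = c("seo audit", "r seo", "seo audit", "log analysis"),
  country = c("FR", "FR", "US", "US"),
  volume = c(1900, 320, 1900, 140),
  stringsAsFactors = FALSE
)

# dim() returns the number of rows first, then the number of columns
dims <- dim(dataset)

# Number of distinct values per column
distinct_counts <- apply(dataset, 2, function(x) length(unique(x)))

# Same count with sapply(), which works column by column
# without first converting the dataframe to a character matrix
distinct_counts_sapply <- sapply(dataset, function(x) length(unique(x)))

# Row positions where the keyword column equals "seo audit"
seo_rows <- which(dataset$keyword == "seo audit")
```

Note that `which()` with `==` finds exact matches only; to find cells that merely contain a word, `grepl("seo", dataset$keyword)` is one option.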

Focus on the dplyr package

dplyr is THE package you need to know. Thanks to it, you will be able to perform many operations on your datasets: selecting, filtering, sorting, ranking, etc.

library("dplyr")

#Select columns and rows

select(df,colA,colB) #Select colA and colB in the df dataset

select(df, colA:colG) #Select from colA to colG

select(df, -colD) #Delete the column D

select(df, -(colC:colH)) #Delete a series of columns

slice(df, 18:23) #Select rows 18 to 23

#Create a filter

filter(df, country=="FR" & page=="home") #Filter the rows that contain FR (country column) and home (page column)

filter(df, country=="US" | country=="IN") #Filter rows whose country is US or IN

filter(df, size>100500, r_os=="linux-gnu") #Combine several conditions with a comma (equivalent to &)

filter(cran, !is.na(r_version)) #Keep the rows whose r_version is not missing (NA)

#Sort your data

arrange(keywordDF, volume) #Sort the dataset according to the values in the volume column (ascending order)

arrange(keywordDF, desc(volume)) #Sort the dataset in descending order

arrange(keywordDF, concurrence, volume) #Sort the data according to several variables (several columns)

arrange(keywordDF, concurrence, desc(volume), prixAdwords)
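These verbs become more useful once they are chained. Below is a minimal sketch that combines `filter()`, `arrange()` and `select()` with the `%>%` pipe that dplyr makes available. The `keywordDF` content and its column names are invented for the example.

```r
library(dplyr)

# Toy keyword dataset (invented values)
keywordDF <- data.frame(
  keyword = c("seo audit", "r seo", "seo tools", "log analysis"),
  country = c("FR", "FR", "US", "FR"),
  volume = c(1900, 320, 5400, 140),
  stringsAsFactors = FALSE
)

# Keep French keywords above 200 searches, sort by volume (highest first),
# then keep only the two columns of interest
top_fr <- keywordDF %>%
  filter(country == "FR", volume > 200) %>%
  arrange(desc(volume)) %>%
  select(keyword, volume)
```

Each step receives the result of the previous one as its first argument, so the dataframe name is written only once.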

Other treatments to know

The following lines are commands that I often use to perform operations on large keyword datasets, such as SEMrush, Ranxplorer or Screaming Frog exports.

These operations help me speed up my search for SEO opportunities. For example, you can classify keywords into themes, de-duplicate keywords after merging several lists, delete empty cells and split a dataset into several smaller datasets, each corresponding to a sub-theme to work on.

For Screaming Frog exports, you will find here some commands to count elements such as the number of URLs crawled, the number of empty cells in a column and the number of URLs for each status code.

#Convert a column to numeric format

keywords$Volume <- as.numeric(as.character(keywords$Volume))

#Add a column with a default value

keywordDF$nouvellecolonne <- 1 #create a new column with the value 1

#Add a column whose value is based on an operation

mutate(keywordDF, TraficEstime = CTRranking * volume) #create a new column (TraficEstime) based on 2 others (CTRranking and volume)

mutate(keywordDF, volumereel = volume / 2)

#Split a dataset into several datasets

#Very useful to divide a list of keywords by theme

split(keywords, keywords$Thematique)
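The de-duplication, empty-cell removal and counting operations mentioned above can be sketched in base R as follows. This is only an illustration: the `keywords` and `crawl` dataframes, their column names (`Keyword`, `Status.Code`, `Title`) and the `is_empty()` helper are hypothetical, and a real export may use different names.

```r
# Hypothetical helper: a cell is "empty" if it is NA or an empty string
is_empty <- function(x) is.na(x) | x == ""

# Toy keyword list after merging several exports (invented values)
keywords <- data.frame(
  Keyword = c("seo audit", "r seo", "seo audit", "", "log analysis"),
  Volume = c("1900", "320", "1900", "50", "140"),
  stringsAsFactors = FALSE
)

keywords <- keywords[!is_empty(keywords$Keyword), ]    # delete rows with an empty keyword
keywords <- keywords[!duplicated(keywords$Keyword), ]  # de-duplicate keywords
keywords$Volume <- as.numeric(keywords$Volume)         # text to numeric

# Toy crawl export (invented values)
crawl <- data.frame(
  Address = c("/", "/blog", "/old", "/contact", "/404"),
  Status.Code = c(200, 200, 301, 200, 404),
  Title = c("Home", "", NA, "Contact", ""),
  stringsAsFactors = FALSE
)

n_urls <- nrow(crawl)                       # number of URLs crawled
n_empty_titles <- sum(is_empty(crawl$Title)) # number of empty cells in a column
status_counts <- table(crawl$Status.Code)   # number of URLs per status code
```

`duplicated()` keeps the first occurrence of each keyword, so sort the dataframe beforehand if you want to keep a particular row.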

Web Scraping and Content Extraction

Creating a crawler is very useful for quickly retrieving specific elements of a web page. This will be used, for example, to monitor the evolution of a competitor’s site: its pricing strategy, content updates, etc.

XML Scraper

With the following script, you can download an XML file, parse it and retrieve some variables that interest you. You will also see how to convert it to a dataframe.

#1. Load packages

library(RCurl)

library(XML)

#2. Get the source code

url <- "https://www.w3schools.com/xml/simple.xml"

xml <- getURL(url, followlocation = TRUE, ssl.verifypeer = FALSE)

#3. Format the code and retrieve the root XML node

doc <- xmlParse(xml)

rootNode <- xmlRoot(doc)

#3.1 Save the source code in an html file in order to see it in its entirety

capture.output(doc, file="file.html")

#4. Get web page contents

xmlName(rootNode) #The name of the XML (1st node)

rootNode[[1]] #All content of the first node

rootNode[[2]][[1]] #The 1st element of the 2nd node

xmlSApply(rootNode, xmlValue) #Get the text of each node, without the tags

xpathSApply(rootNode,"//name",xmlValue) #Some nodes with xPath

xpathSApply(rootNode,"/breakfast_menu//food[calories=900]",xmlValue) #Filter XML nodes by value (here recipes with 900 calories)

#5. Create a data frame or list

menusample <- xmlToDataFrame(doc)

menulist <- xmlToList(doc)
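If you want to try the XPath part without any network access, here is a minimal sketch that parses an XML string written by hand. The menu content is a made-up sample that only imitates the structure of the file used above, and it assumes the XML package is installed.

```r
library(XML)

# Hand-written sample XML (invented values), built without whitespace between tags
xml_text <- paste0(
  "<breakfast_menu>",
  "<food><name>Belgian Waffles</name><price>5.95</price><calories>650</calories></food>",
  "<food><name>French Toast</name><price>4.50</price><calories>600</calories></food>",
  "<food><name>Homestyle Breakfast</name><price>6.95</price><calories>950</calories></food>",
  "</breakfast_menu>"
)

# Parse the string directly instead of downloading a file
doc <- xmlParse(xml_text, asText = TRUE)

# All food names
food_names <- xpathSApply(doc, "//food/name", xmlValue)

# Names of the foods with more than 600 calories
heavy <- xpathSApply(doc, "//food[calories > 600]/name", xmlValue)

# One row per <food>, one column per child tag; values arrive as text
menu <- xmlToDataFrame(doc)
menu$calories <- as.numeric(as.character(menu$calories))
```

The predicate in square brackets filters the `food` nodes, and the trailing `/name` then picks which child to return, so filter and extraction stay in a single expression.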

HTML Scraper

Retrieving the links from a page or a list of articles: these are some examples of what you can do with the following script.

#1. Load packages

library(httr)

library(XML)

#2. Get the source code

url <- "https://remibacha.com"

request <- GET(url)

doc <- htmlParse(content(request, as = "text", encoding = "UTF-8"), asText = TRUE)

#3. Get the title and count the number of characters

PageTitle <- xpathSApply(doc, "//title", xmlValue)

nchar(PageTitle)

#4. Get posts names

PostTitles <- data.frame(xpathSApply(doc, "//h2[@class='entry-title h1']", xmlValue))

PostTitles <- data.frame(xpathSApply(doc, "//h2", xmlValue)) #Alternative: every h2 on the page

#5. Retrieve the links on the page (here, the a tags placed directly inside a div) and make a list of them

hrefs <- xpathSApply(doc, "//div/a", xmlGetAttr, 'href')

hrefs <- data.frame(matrix(unlist(hrefs), byrow=TRUE))

#6. Retrieve links from the menu

liensmenu <- xpathSApply(doc, "//ul[@id='menu-menu']//a", xmlGetAttr, 'href')

liensmenu <- data.frame(matrix(unlist(liensmenu), byrow=TRUE))

#7. Retrieve the status code and header

status_code(request)

header <- headers(request)

header <- data.frame(matrix(unlist(header), byrow=TRUE))
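The same extraction logic can be tested offline on a small HTML string. This is a sketch under assumptions: the page content, the `menu` id and the rule used to flag internal links (anything that does not start with `http://` or `https://`) are all invented for the example.

```r
library(XML)

# Hand-written sample page (invented content)
html_text <- paste0(
  "<html><head><title>Demo page</title></head><body>",
  "<ul id='menu'><li><a href='/blog'>Blog</a></li>",
  "<li><a href='/contact'>Contact</a></li></ul>",
  "<div><a href='https://example.org/partner'>Partner</a></div>",
  "</body></html>"
)

doc <- htmlParse(html_text, asText = TRUE)

# Title and its length in characters
page_title <- xpathSApply(doc, "//title", xmlValue)
title_length <- nchar(page_title)

# "//a" matches every link, wherever it sits in the page
all_links <- xpathSApply(doc, "//a", xmlGetAttr, "href")

# Links located inside the menu only
menu_links <- xpathSApply(doc, "//ul[@id='menu']//a", xmlGetAttr, "href")

# Simple internal/external flag based on the href prefix
links_df <- data.frame(
  href = all_links,
  internal = !grepl("^https?://", all_links),
  stringsAsFactors = FALSE
)
```

Using `//a` rather than `//div/a` matters here: the two menu links sit inside `li` tags, so a selector limited to direct children of a `div` would miss them.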

JSON Scraper

#1. Load the package

library(jsonlite)

#2. Get the JSON

jsonData <- fromJSON("https://api.github.com/users/jtleek/repos")

#3. Retrieve the names of all nodes

names(jsonData)

#4. Retrieve the names of all nodes in the "owner" node

names(jsonData$owner)

#5. Retrieve values from the login node

jsonData$owner$login
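Nested nodes such as `owner` come back as a dataframe inside the dataframe, which can be awkward to export. Here is a minimal sketch on a hand-written JSON string (the repository names and values are invented) showing how `jsonlite::flatten()` turns nested nodes into ordinary columns.

```r
library(jsonlite)

# Hand-written sample JSON (invented values)
json_text <- '[
  {"name": "repo-a", "stargazers_count": 12, "owner": {"login": "alice"}},
  {"name": "repo-b", "stargazers_count": 3,  "owner": {"login": "alice"}}
]'

# fromJSON() accepts a JSON string as well as a URL
repos <- fromJSON(json_text)

# The nested node is reachable with $, as in the script above
logins <- repos$owner$login

# flatten() expands nested dataframes into columns named parent.child
flat <- jsonlite::flatten(repos)
flat_names <- names(flat)
```

After flattening, the result is a regular dataframe that can be written to a CSV file like any other dataset.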

I hope these few examples of using R for your SEO data processing have made you want to go further! Please share this post on your social networks if you enjoyed it.

The best SEO tutorials with R

Coming soon. Follow me on Twitter (@Remibacha) to be informed of the next update of this article.

Hi,

your page https://remibacha.com/en/r-seo-guide/ has many duplicate entries for some reason. You might want to check what caused it 🙂

Also, thank you for the SEO tip. I love it!

Kind regards, Kirill

Patched, thanks Kirill 😉